This procedure makes it easy to remember what each variable in an R session is for. One of the troubles with exploratory data analysis is that, once there are many variables, it becomes hard to recall why a given variable was originally created. Code comments can help, but they make the files larger and, in some cases, unwieldy. One solution is to attach a comment field to each object as it is created, so that we can query the object later and see a description. For example, we could create a time series called sales_ts, then create a window of it called sales_ts_window_a, another called sales_ts_window_b, and so on for several distinct spans of time. As the project moves along we may create numerous other variables and subsets of those variables. We can inspect their contents with head() or tail(), but that shows what an object holds, not why it was created.

To that end, these code segments allow applying a descriptive comment to an item and then querying that comment later via a describe command.

example_object <- "I appreciate r-cran."

# This defines describe(), which reads the "help" attribute/field of an object.

# The attribute/field name could be changed to something other than "help".

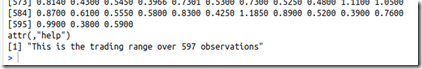

describe <- function(obj) attr(obj, "help")

# to use it, take the object and modify the "help" attribute/field.

attr(example_object, "help") <- "This is an example comment field."

describe(example_object)

The example above uses a placeholder object, but it could just as easily be the sales_ts_window_a mentioned earlier. We would use attr() to apply our description to sales_ts_window_a.

attr(sales_ts_window_a, "help") <- "Sales for the three quarters Jan was manager"

attr(sales_ts_window_b, "help") <- "Sales for the five quarters Bob was manager"

After hours or days have passed and there are many more variables under investigation, a simple query reveals the comment.

describe(sales_ts_window_a)

[1] "Sales for the three quarters Jan was manager"
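The whole workflow can be sketched end to end as follows. This is a minimal illustration, not the original project's code: the quarterly sales figures and the start date are invented, and the window boundaries are assumptions chosen only so that the two windows span three and five quarters.

```r
# Hypothetical quarterly sales figures, invented purely for illustration.
sales_ts <- ts(c(120, 135, 150, 142, 160, 171, 165, 180),
               start = c(2020, 1), frequency = 4)

# Reader for the "help" attribute, as defined in the article.
describe <- function(obj) attr(obj, "help")

# Assumed spans: 2020 Q1 to 2020 Q3 (three quarters) ...
sales_ts_window_a <- window(sales_ts, start = c(2020, 1), end = c(2020, 3))
# ... and 2020 Q4 to 2021 Q4 (five quarters).
sales_ts_window_b <- window(sales_ts, start = c(2020, 4), end = c(2021, 4))

# Attach the descriptions right after creating each object.
attr(sales_ts_window_a, "help") <- "Sales for the three quarters Jan was manager"
attr(sales_ts_window_b, "help") <- "Sales for the five quarters Bob was manager"

# Query the descriptions later.
describe(sales_ts_window_a)
describe(sales_ts_window_b)

# An object that was never labelled simply returns NULL.
describe(sales_ts)
```

Because describe() returns NULL for unlabelled objects, it is safe to call on anything in the workspace.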

This might seem burdensome, but RStudio makes it very easy to add via code snippets. We can create two code snippets. The first goes at the top of the file and defines the describe function that reads the field holding our comment. The second applies a comment to an object. Open RStudio Settings > Code > Code Snippets and add the following code. RStudio requires tabs to indent these.

snippet lblMaker

	#

	# Code and Example for Providing Descriptive Comments about Objects

	#

	example_object <- "I appreciate r-cran."

	# This defines describe(), which reads the "help" attribute/field of an object.

	# The attribute/field name could be changed to something other than "help".

	describe <- function(obj) attr(obj, "help")

	# to use it, take the object and modify the "help" attribute/field.

	attr(example_object, "help") <- "This is an example comment field."

	describe(example_object)

snippet lblThis

	attr(ObjectName, "help") <- "Replace this text with comment"

Now one can use code completion to add the label maker to the top of the script. Simply start typing lblMak and hit the tab key to complete the code snippet. To label an object for future examination, start typing lblTh and hit tab to complete it, then replace ObjectName with the variable name and replace the string on the right with the comment. These code snippets provide a valuable way to store descriptive information about variables as they are created and set aside for potential future use.

This functionality overlaps with the built-in comment() function, with a bit of a twist. A description added via this method appears at the end of the print output when typing the variable name, whereas a comment set with comment() is not printed. Calling comment() is also less intuitive than calling describe() and receiving a description.
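The difference in printing can be checked with a small sketch. The vectors x and y and their label strings are made up for the comparison; capture.output() is used only to record what print() would show at the console.

```r
# Two identical vectors, labelled in two different ways (illustrative values).
x <- c(1, 2, 3)
attr(x, "help") <- "Labelled via attr()"

y <- c(1, 2, 3)
comment(y) <- "Labelled via comment()"

# Capture what print() shows for each object.
printed_x <- capture.output(print(x))
printed_y <- capture.output(print(y))

# The "help" attribute is printed after the values ...
printed_x

# ... while the comment is stored but left out of the printed output.
printed_y

# The comment is still retrievable on request.
comment(y)
```

Which behaviour is preferable depends on the object: for long printouts, a label that stays hidden until asked for may be the better fit.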

Base R does not ship a describe() function of its own; summary() is the one I use most often. For a fuller description, I load the psych package and call psych::describe(data). Writing the psych:: prefix keeps that function distinct from the describe() defined in this article, so both remain usable. The printout appears like below with the [1]…

Attributes other than “help” could easily be added, along with matching functions such as DescribeAuthor, DescribeLocation, and others. When programming at the console, a conversational style like this makes the work flow better.
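A sketch of how such extra readers might look is below. The function names come from the paragraph above, but the attribute names "author" and "location" and the values stored in them are assumptions made for illustration only.

```r
# Reader for the "help" attribute, as defined earlier in the article.
describe <- function(obj) attr(obj, "help")

# Additional readers; the attribute names "author" and "location" are assumed.
DescribeAuthor <- function(obj) attr(obj, "author")
DescribeLocation <- function(obj) attr(obj, "location")

example_object <- "I appreciate r-cran."

# Attach several descriptive fields to the same object (placeholder values).
attr(example_object, "help") <- "This is an example comment field."
attr(example_object, "author") <- "Jan"
attr(example_object, "location") <- "data/sales.csv"

# Each field is then queried with its own function.
describe(example_object)
DescribeAuthor(example_object)
DescribeLocation(example_object)
```

Keeping one small function per attribute preserves the conversational feel at the console, at the cost of a few extra definitions at the top of the script.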